In June 2018, OpenAI published a paper that almost nobody noticed. The title was dry: “Improving Language Understanding by Generative Pre-Training.” The model described had 117 million parameters. It could complete sentences and answer basic questions about text passages.

Five years later, GPT-4 was writing legal briefs, debugging complex software, and passing professional exams that most humans fail. The progression from that 2018 paper to the 2023 multimodal system represents one of the fastest capability jumps in computing history.

But the path was not straight. It included a controversy about “dangerous AI” that looks absurd in hindsight, a scaling bet that many researchers thought was wrong, and a product launch that nobody predicted would become the fastest-growing consumer application ever built.

GPT-1: The Proof Nobody Saw

The original GPT paper arrived during a quiet moment in AI research. Transformers had been introduced a year earlier. Researchers were still figuring out what the architecture could do.

OpenAI’s contribution was conceptual. They showed that you could train a language model on massive amounts of unlabeled text, then fine-tune it for specific tasks with small amounts of labeled data. Train first. Specialize later.

This matters because labeled data is expensive. Someone has to read each example and mark whether it expresses positive or negative sentiment, whether it contains a named entity, whether it answers a question correctly. Getting millions of labeled examples costs real money and takes real time.

Unlabeled text is essentially free. The internet generates it constantly. GPT-1 proved that you could extract useful knowledge from raw text, then apply that knowledge to downstream tasks with minimal additional training.

The model scored 72.8 on GLUE, a benchmark for language understanding. The previous record was 68.9. A meaningful improvement, but not one that suggested the technology would reshape entire industries within half a decade.

Most AI researchers at the time were focused on other approaches. GPT-1 was interesting. It was not obviously world-changing.

GPT-2: The Controversy That Aged Poorly

OpenAI released GPT-2 in February 2019. It had 1.5 billion parameters. That is roughly thirteen times larger than GPT-1. The model could generate coherent paragraphs of text on almost any topic.

Then something unusual happened. OpenAI announced they would not release the full model. The reason: concerns about misuse. The press coverage was immediate. An AI too dangerous to release? Headlines wrote themselves.

The reaction from the technical community was mixed, and looking back years later, the skepticism proved warranted. On Hacker News, user empiko captured what many came to feel: “I remember when GPT-2 was ‘too dangerous’ to release. I am confused why people still take these clown claims seriously.”

Others suspected the framing was strategic. User sva_ noted: “The GPT2 weights have later been released which made some people suspect the ‘too dangerous to release’ stuff was mostly hype/marketing.”

OpenAI eventually released the full model in November 2019, nine months after the initial announcement. The expected flood of AI-generated misinformation did not materialize. At least not from GPT-2.

What matters about GPT-2 is not the controversy. The controversy aged poorly. What matters is that OpenAI demonstrated that scaling worked. A model thirteen times larger performed dramatically better. This was data, not theory. It would inform everything that followed.

GPT-3: When the Skeptics Were Wrong

GPT-3 arrived in mid-2020: the paper appeared in late May, and the API opened in June. The numbers were staggering. 175 billion parameters. Trained on 570 gigabytes of text. The model was more than a hundred times larger than GPT-2.

Many researchers thought this was wasteful. Bigger models are expensive to train and expensive to run. The assumption was that returns would diminish. You cannot just keep making things larger and expect proportional improvements.

GPT-3 proved this assumption wrong.

The model demonstrated “few-shot learning” in ways that surprised even its creators. You could give it a few examples of a task, and it would figure out the pattern without any fine-tuning. Show it three examples of English sentences translated to French, and it would translate the fourth. Show it three questions with answers, and it would answer the fourth.
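To make the few-shot idea concrete, here is a minimal sketch of how such a prompt might be assembled. The function name `build_few_shot_prompt`, the "English:"/"French:" labels, and the sample sentences are illustrative assumptions, not a format OpenAI prescribes. The point is only that the "training" lives entirely in the prompt text: three worked examples, then an open slot for the model to fill.

```python
def build_few_shot_prompt(examples, query, src_label="English", tgt_label="French"):
    """Format worked examples followed by one unanswered query.

    The labels and layout are hypothetical; any consistent pattern would do.
    """
    lines = []
    for source, target in examples:
        lines.append(f"{src_label}: {source}")
        lines.append(f"{tgt_label}: {target}")
        lines.append("")  # blank line separates one example from the next
    lines.append(f"{src_label}: {query}")
    lines.append(f"{tgt_label}:")  # left open for the model to complete
    return "\n".join(lines)


# Three demonstrations, then a fourth sentence for the model to translate.
examples = [
    ("Good morning.", "Bonjour."),
    ("Thank you very much.", "Merci beaucoup."),
    ("Where is the station?", "Où est la gare ?"),
]
prompt = build_few_shot_prompt(examples, "I would like a coffee.")
print(prompt)
```

The resulting string would be sent to the model as ordinary input. No weights change; the model is simply continuing a pattern it can see.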

When OpenAI opened the API to developers, reactions split sharply. On Hacker News, user denster captured the excitement: “we were just blown away. Very cool!!”

But not everyone was impressed. User Barrin92 pushed back: “All GPT-3 does is generate text…it doesn’t actually understand anything.”

OpenAI’s CEO Sam Altman tried to moderate expectations. “The GPT-3 hype is way too much,” he wrote. “It’s impressive but it still has serious weaknesses.”

He was right about the weaknesses. The model hallucinated confidently. It could not do basic arithmetic reliably. It had no persistent memory between sessions. It sometimes generated toxic or biased content.

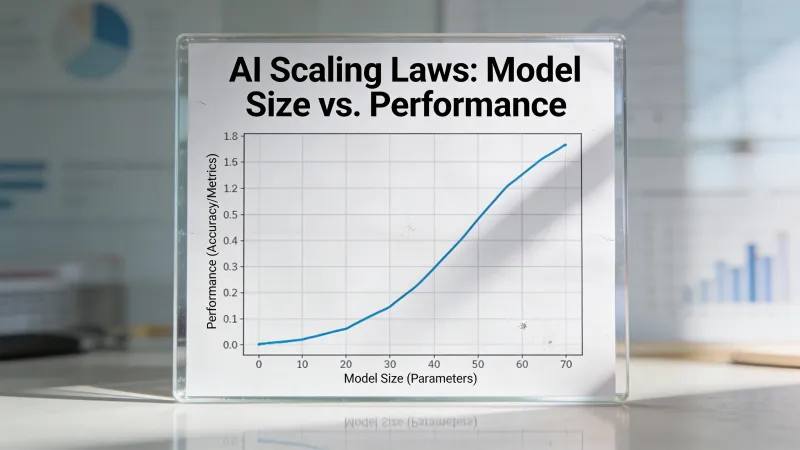

But the scale bet had paid off. Bigger models were smarter models. This insight would drive the next three years of AI development and billions of dollars in investment.

The Missing Ingredient: Making It Usable

GPT-3 existed for two and a half years before ChatGPT launched. The underlying model, GPT-3.5, was a refined descendant of GPT-3, not a dramatically different system. What changed was the interface.

GPT-3 required an API key. You needed to know what a prompt was. You had to understand that the model needed context and examples to perform well. The barrier to entry was real.

ChatGPT removed all of it. Free. Conversational. Optimized through reinforcement learning from human feedback to be helpful and harmless. You just typed and it responded.

Five days after launch, one million users. Two months later, one hundred million. Nothing in consumer technology had grown that fast.

Having a model that anyone could use changed how the public thought about AI. Before ChatGPT, AI was something specialists worked with. After ChatGPT, it was something your aunt asked you about at Thanksgiving.

This matters for understanding GPT-4. The technology leap was real, but the adoption leap came from making advanced AI accessible to ordinary people, not from raw capability alone.

GPT-4: The Leap That Proved the Point

OpenAI announced GPT-4 on March 14, 2023. The model could now process images alongside text. You could upload a photo and ask questions about it. You could show it a diagram and request an explanation.

The capability improvements were substantial. By OpenAI's reporting, GPT-4 passed a simulated bar exam with a score around the 90th percentile. GPT-3.5 had scored around the 10th percentile. That is not incremental improvement. That is a qualitative shift in capability.

On Hacker News, user hooande noted what excited developers: “The ability to dump 32k tokens into a prompt (25,000 words) seems like it will drastically expand the reasoning capability.”

Enterprise adoption accelerated immediately. Stripe integrated GPT-4 to summarize business websites for customer support. Duolingo built it into a new subscription tier. Morgan Stanley created a system to serve financial analysts. Khan Academy developed an automated tutor.

The multimodal capability was genuinely new. Previous language models processed only text. GPT-4 could look at a photograph and describe what was happening, identify objects, read text in images, and reason about visual relationships.

OpenAI withheld technical details about GPT-4’s architecture and training data. The company that once worried GPT-2 was too dangerous to release had become far more secretive about far more powerful technology. The irony was not lost on observers.

What Actually Changed Between Versions

The progression from GPT-1 to GPT-4 involved three fundamental shifts.

Scale. GPT-1 had 117 million parameters. GPT-4’s parameter count was never officially confirmed, but credible estimates place it at over one trillion. That is roughly a ten-thousand-fold increase in under five years. Each jump in scale produced capabilities that could not be predicted from smaller models.
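The scale arithmetic is easy to check. The sketch below uses only the parameter counts quoted in this article; the GPT-4 figure is the unconfirmed one-trillion estimate, taken here as an assumption, and the names `PARAMS` and `growth_factors` are illustrative.

```python
# Parameter counts as quoted in this article.
# The GPT-4 value is an unconfirmed estimate, not an official figure.
PARAMS = {
    "GPT-1": 117e6,
    "GPT-2": 1.5e9,
    "GPT-3": 175e9,
    "GPT-4 (estimate)": 1e12,
}


def growth_factors(params):
    """Return the size ratio between each model and its predecessor."""
    names = list(params)
    return {
        f"{prev} -> {curr}": params[curr] / params[prev]
        for prev, curr in zip(names, names[1:])
    }


factors = growth_factors(PARAMS)
overall = PARAMS["GPT-4 (estimate)"] / PARAMS["GPT-1"]

for step, factor in factors.items():
    print(f"{step}: about {factor:.1f}x")
print(f"GPT-1 -> GPT-4 (estimate): about {overall:,.0f}x")
```

With a flat one trillion parameters the overall ratio comes out near 8,500, so "ten-thousand-fold" is a round figure that holds only if the true count sits somewhat above one trillion.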

Training data. GPT-1 trained on books. GPT-3 added Common Crawl, a massive web scrape. GPT-4’s training data remains undisclosed, but the model demonstrates knowledge that could only come from extensive exposure to code, academic papers, and specialized domains.

Alignment techniques. Raw language models optimize for prediction. They generate whatever text seems statistically likely given the prompt. Reinforcement learning from human feedback, applied to these models between GPT-3 and ChatGPT, taught them to optimize for helpfulness and harmlessness instead. This made the technology usable by ordinary people who had no idea how to write prompts.

The architectural changes were less dramatic than commonly assumed. GPT-4 still uses transformers. The attention mechanism is recognizable from the 2017 paper that started everything. The revolution came from scale, data, and training methodology, not from fundamental architectural innovations.

The Numbers Tell the Story

Here is what each version could do, measured by the benchmarks that matter:

GPT-1 scored 72.8 on GLUE. This beat the previous record of 68.9. Meaningful progress. Not transformative.

GPT-2 generated text coherent enough to fool casual readers. It could not follow instructions reliably or maintain context across long conversations.

GPT-3 introduced few-shot learning. Give it examples, and it figures out the pattern. This was the first version that felt genuinely useful for real work, even though the outputs required heavy editing.

GPT-4 passed professional exams. Bar exam: 90th percentile. GRE verbal: 99th percentile. AP exams in multiple subjects: passing scores. This was the first version that consistently outperformed the average human test-taker on standardized exams.

The shift from “interesting research” to “useful tool” happened somewhere between GPT-2 and GPT-3. The shift from “useful tool” to “potential replacement for some human cognitive work” happened somewhere between GPT-3 and GPT-4.

Why GPT-2 Gets More Ink Than GPT-1

Notice that GPT-1 barely registers in most histories. Nobody debates whether GPT-1 should have been released. Nobody remembers what they thought when they first saw GPT-1 outputs.

GPT-2 is different. The “too dangerous” framing created a narrative. People had opinions. The controversy generated coverage that the technical achievement alone would not have.

This matters because it reveals something about how technology enters public consciousness. GPT-1 was important for what it proved technically. GPT-2 was important for the debate it started. GPT-3 was important for being useful. GPT-4 was important for being good enough that people started worrying about jobs.

Each version mattered for different reasons. Understanding those reasons helps you understand what actually drives AI adoption and concern.

The Pattern Worth Understanding

Each GPT version followed a pattern. Technical capability jumped. Public reaction split between excitement and skepticism. Predicted harms either failed to materialize or materialized in unexpected ways. Real applications emerged that nobody anticipated.

The fake news fears around GPT-2 look almost quaint now. The model that was “too dangerous to release” is trivially outperformed by systems anyone can access for free.

The “GPT-3 is not that impressive” takes aged poorly. The model that some dismissed as glorified autocomplete became the foundation for products that hundreds of millions of people use daily.

The concerns about GPT-4 are still unresolved. Whether it represents progress toward beneficial AI or a step toward systems we cannot control depends on who you ask and what time horizon you consider.

What seems clear is that each version made AI more capable and more accessible. The technology that started as a research curiosity in 2018 is now embedded in how millions of people work. The gap between GPT-1 and GPT-4 is the gap between academic proof-of-concept and infrastructure that organizations depend on.

What Comes Next

Understanding this progression matters because it continues. GPT-5 exists. Competing models from Anthropic, Google, and Meta have pushed capabilities further. The pace of improvement shows no signs of slowing.

The track record suggests betting against capability improvements is unwise. The track record also suggests that the impacts, both positive and negative, will differ from predictions.

The only prediction that has consistently held: the next version will be better than the last. How much better, and what that means for how we work and live, remains genuinely unknown.

Six years took us from a paper nobody read to technology that 10% of adults use weekly. The next six years will likely bring changes just as dramatic. Understanding where we came from is the best preparation for figuring out where we are going.