Sometimes you should give the AI examples of what you want. Sometimes you shouldn’t.

This sounds like it should be obvious, but the difference between “zero-shot” and “few-shot” prompting matters more than most people realize. Choosing wrong doesn’t just cost you time. It can actually make your results worse.

The good news: once you understand when examples help and when they hurt, you can make better decisions by default.

The Basic Distinction

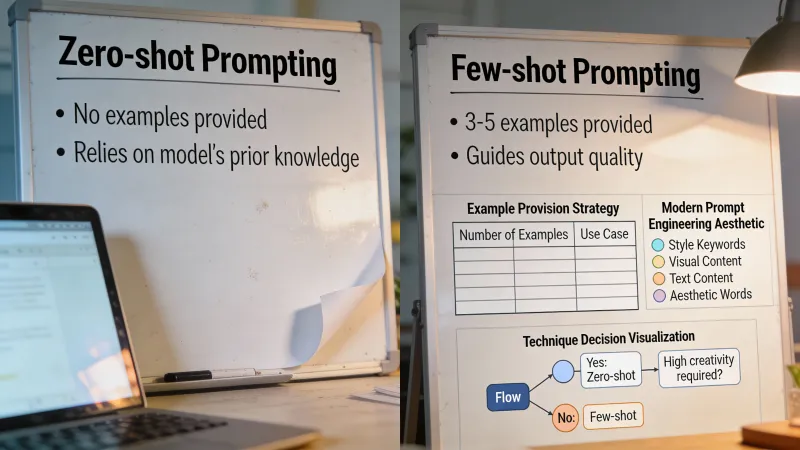

Zero-shot prompting means giving the AI a task with no examples. You describe what you want and let the model figure it out based on its training.

“Classify this customer review as positive, negative, or neutral: ‘The product arrived late but worked perfectly.’”

Few-shot prompting means including examples in your prompt. You show the AI what you want by demonstrating it first.

“Classify these reviews as positive, negative, or neutral:

Review: ‘Absolutely love it, best purchase ever!’ - Positive

Review: ‘Broke after two days.’ - Negative

Review: ‘It’s okay, nothing special.’ - Neutral

Now classify this review: ‘The product arrived late but worked perfectly.’”

Same task. Different approach. Different results.

IBM’s research on prompting explains why this matters: zero-shot prompting relies entirely on the model’s pre-training to infer appropriate responses. When that pre-training aligns with your task, zero-shot works great. When it doesn’t, examples fill the gap.
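Here is a minimal sketch of how the two prompts above could be assembled in code. The function names `zero_shot_prompt` and `few_shot_prompt` are made up for illustration; the review texts and labels are the ones from the example.

```python
def zero_shot_prompt(review: str) -> str:
    """Task description only, no demonstrations."""
    return (
        "Classify this customer review as positive, negative, or neutral: "
        f"'{review}'"
    )


def few_shot_prompt(review: str, examples: list[tuple[str, str]]) -> str:
    """Same task, preceded by labeled demonstrations."""
    lines = ["Classify these reviews as positive, negative, or neutral:", ""]
    for text, label in examples:
        lines.append(f"Review: '{text}' - {label}")
    lines += ["", f"Now classify this review: '{review}'"]
    return "\n".join(lines)


EXAMPLES = [
    ("Absolutely love it, best purchase ever!", "Positive"),
    ("Broke after two days.", "Negative"),
    ("It's okay, nothing special.", "Neutral"),
]

review = "The product arrived late but worked perfectly."
print(zero_shot_prompt(review))
print()
print(few_shot_prompt(review, EXAMPLES))
```

Keeping the examples in a list, separate from the template, makes it easy to swap them out when you test which ones help.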

When Zero-Shot Works

Zero-shot prompting works best when:

The task is common and well-defined. Summarization, translation, simple classification, general writing tasks. These are tasks the model has seen millions of times during training. It doesn’t need you to explain how summaries work.

You want the model’s natural interpretation. Sometimes you don’t have a specific format in mind. You want to see how the AI approaches something without being constrained by examples.

The task is straightforward. “Write a thank-you email.” “Explain photosynthesis to a 10-year-old.” “List five marketing ideas for a coffee shop.” No examples needed.

You’re working with reasoning-focused models. Here’s something counterintuitive: research on newer reasoning models, such as OpenAI’s o1 series, suggests they can perform worse when given examples. These models run their own internal reasoning process, and examples can interfere with it. They tend to figure things out better when you give them room to think.

Example (zero-shot works well):

“Summarize this 500-word article in 2-3 sentences, focusing on the main argument and conclusion.”

The model knows what summaries look like. No demonstration needed.

When Few-Shot Works Better

Few-shot prompting shines when:

Format matters and is non-obvious. If you want output in a specific structure that the model wouldn’t guess, show it.

Style or tone is particular. “Write in our brand voice” is vague. Showing three examples of your brand voice is concrete.

The task is unusual or domain-specific. Extracting specific data from legal documents, classifying technical terms in a niche field, reformatting content in a custom way. These aren’t tasks the model has seen often.

Consistency across outputs matters. If you’re generating 50 product descriptions and they all need to match, examples create that pattern.

The Prompt Engineering Guide notes that few-shot prompting enables “in-context learning,” where the demonstrations in the prompt condition the model’s response to the input that follows. You’re essentially teaching the model your requirements through demonstration rather than explanation.

Example (few-shot works better):

“Convert these meeting notes into action items:

Notes: ‘Discussed Q3 targets. Sarah to update forecast by Friday. Need to hire two more reps. John handling job posts.’

Action items:

- Sarah: Update Q3 forecast (due: Friday)

- John: Create and post job listings for 2 sales rep positions

Notes: ‘Reviewed campaign performance. Maria to pull detailed analytics. Budget reallocation needed. Tom to draft proposal.’

Action items:

- Maria: Pull detailed campaign analytics

- Tom: Draft budget reallocation proposal

Now convert these notes: ‘Sprint review went well. Bug with payment system needs fix. Alex investigating. Design team needs copy for new landing page by Wednesday.’”

Without examples, the model might format action items differently, include different levels of detail, or miss the deadline notation pattern. The examples create a template.

The Research: How Many Examples?

Studies on few-shot prompting tend to find diminishing returns after the first 2-3 examples, with performance typically leveling off by around 4-5 demonstrations. Beyond that point, more examples burn tokens without meaningful accuracy gains.

The sweet spot for most tasks: 2-5 examples.

But here’s the catch: quality beats quantity. Studies have shown that example selection matters more than example count. Poorly chosen examples can actually hurt performance compared to no examples at all.

What makes examples effective:

- They’re diverse, covering different scenarios within the task

- They represent the actual distribution of inputs you’ll see

- They’re clear and correct

- They match the complexity level you expect

Bad example set: Three nearly identical examples that all follow the same pattern.

Good example set: Three examples that show different variations, like short vs. long inputs, simple vs. complex cases, or different edge conditions.
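One way to avoid the "three nearly identical examples" trap is to pick examples that overlap as little as possible. The sketch below is only one possible heuristic, not something the research above prescribes: it measures similarity by shared words (Jaccard overlap) and greedily picks the candidate least like the ones already chosen. The helper names and the example pool are hypothetical.

```python
def jaccard(a: str, b: str) -> float:
    """Word-overlap similarity between two texts, from 0.0 to 1.0."""
    wa, wb = set(a.lower().split()), set(b.lower().split())
    if not wa and not wb:
        return 1.0
    return len(wa & wb) / len(wa | wb)


def pick_diverse(pool: list[str], k: int) -> list[str]:
    """Greedily pick up to k texts, each as unlike the chosen ones as possible."""
    if not pool or k <= 0:
        return []
    chosen = [pool[0]]
    remaining = pool[1:]
    while remaining and len(chosen) < k:
        # Pick the candidate whose closest already-chosen neighbour is least similar.
        best = min(remaining, key=lambda c: max(jaccard(c, s) for s in chosen))
        chosen.append(best)
        remaining.remove(best)
    return chosen


pool = [
    "Broke after two days.",
    "Broke after three days.",
    "Broke after two weeks.",
    "Absolutely love it, best purchase ever!",
    "It's okay, nothing special.",
]
print(pick_diverse(pool, 3))
```

Word overlap is a crude measure. It will not notice that two differently worded examples cover the same scenario, so treat the result as a starting point and check it by eye.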

Order and Format Matter

Research reveals something surprising: the same examples in a different order can produce very different results. In some reported experiments, the best orderings approached state-of-the-art accuracy while the worst fell to near chance levels.

Some guidelines:

- Put your strongest, clearest example first

- Vary the examples rather than grouping similar ones

- End with an example closest to your actual task

- Keep examples consistent in format with each other
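Two of these guidelines, clearest example first and closest example last, can be sketched as a small ordering helper. This is an illustration under assumptions: you decide which example is "clearest," and "closest to your task" is approximated by counting shared words. The function names and the third example headline are hypothetical.

```python
def overlap(a: str, b: str) -> int:
    """Number of lowercase words two texts share (a crude similarity proxy)."""
    return len(set(a.lower().split()) & set(b.lower().split()))


def order_examples(examples, query, clearest):
    """Return examples with `clearest` first and the one most like `query` last.

    examples: list of (input_text, output_text) pairs
    clearest: the pair you judge to be the strongest demonstration
    """
    rest = list(examples)
    rest.remove(clearest)  # raises ValueError if clearest is not in examples
    if not rest:
        return [clearest]
    closest = max(rest, key=lambda e: overlap(e[0], query))
    rest.remove(closest)
    return [clearest] + rest + [closest]


examples = [
    ("Merger talks collapse", "Negative"),
    ("Company beats earnings expectations", "Positive"),
    ("Interest rates held steady this month", "Neutral"),  # hypothetical example
]
query = "Interest rate decision delayed until next month"

for text, label in order_examples(examples, query, clearest=examples[1]):
    print(f"'{text}' - {label}")
```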

Here’s the really weird finding: in some experiments, even examples with random labels in the proper format outperformed no examples at all. The format itself teaches the model what you expect. In those experiments, label accuracy mattered less than consistent structure.

This doesn’t mean you should use wrong examples. But it shows that format and structure carry more weight than you might think.

When Both Approaches Fail

Sometimes neither zero-shot nor few-shot gets you where you need to go.

IBM’s prompting research identifies scenarios where both fail: complex multi-step reasoning, arithmetic problems, tasks requiring logical deduction across many steps.

For these, you need different techniques entirely. Chain-of-thought prompting asks the model to reason step by step. Tree-of-thoughts explores multiple reasoning paths. These approaches work at a different level than simply providing examples.
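As a rough illustration of the simplest of these, chain-of-thought, the sketch below wraps a task with a request to reason step by step and then pulls the final answer out of the response. The exact wording, the `Answer:` marker, and both function names are assumptions for this example, not a standard.

```python
from typing import Optional


def with_step_by_step(task: str) -> str:
    """Ask for visible reasoning before the final answer."""
    return (
        f"{task}\n\n"
        "Work through this step by step, then give the final answer "
        "on its own line, starting with 'Answer:'."
    )


def final_answer(response: str) -> Optional[str]:
    """Return the text after the last 'Answer:' line, or None if there is none."""
    for line in reversed(response.splitlines()):
        if line.strip().startswith("Answer:"):
            return line.split("Answer:", 1)[1].strip()
    return None


print(with_step_by_step("A store sells pens at 3 for $2. How much do 12 pens cost?"))

# Hypothetical model response, used only to show the parsing step.
sample_response = "12 pens is 4 groups of 3.\n4 groups at $2 each is $8.\nAnswer: $8"
print(final_answer(sample_response))
```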

If you’re finding that examples don’t help and the task is complex, the answer usually isn’t “more examples.” It’s a different prompting strategy.

Practical Decision Framework

When facing a new task, run through these questions:

1. Is this a common task the model has seen before, such as summarization, translation, basic writing, or simple classification? Yes = try zero-shot first. No = lean toward few-shot.

2. Does specific format or style matter? Yes = few-shot. Show the format you want. No = zero-shot is probably fine.

3. Am I using a reasoning-focused model (like o1)? Yes = favor zero-shot. Examples can interfere with these models’ reasoning. No = few-shot when useful.

4. Do I need consistency across many outputs? Yes = few-shot. The examples create a template. No = zero-shot is simpler.

5. Is the task unusual or domain-specific? Yes = few-shot. The model needs guidance. No = zero-shot likely works.
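The five questions can be turned into a rough checklist function. The questions do not say which answer wins when they conflict, so the priority used here (a reasoning-focused model overrides everything else, then any few-shot signal wins) is an assumption, as are the `Task` and `recommend` names.

```python
from dataclasses import dataclass


@dataclass
class Task:
    common: bool             # Q1: a task the model has likely seen often
    format_matters: bool     # Q2: a specific format or style is required
    reasoning_model: bool    # Q3: using a reasoning-focused model (like o1)
    needs_consistency: bool  # Q4: many outputs must match each other
    domain_specific: bool    # Q5: the task is unusual or niche


def recommend(task: Task) -> str:
    """Return a starting point, not a verdict: 'zero-shot' or 'few-shot'."""
    if task.reasoning_model:
        # Assumed priority: examples can interfere with these models.
        return "zero-shot"
    if (
        task.format_matters
        or task.needs_consistency
        or task.domain_specific
        or not task.common
    ):
        return "few-shot"
    return "zero-shot"


summarize = Task(common=True, format_matters=False, reasoning_model=False,
                 needs_consistency=False, domain_specific=False)
legal_extract = Task(common=False, format_matters=True, reasoning_model=False,
                     needs_consistency=True, domain_specific=True)

print(recommend(summarize))      # zero-shot
print(recommend(legal_extract))  # few-shot
```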

Real-World Scenarios

Let’s walk through some common situations.

Writing Marketing Emails

Scenario: You need five different email subject lines for a product launch.

Best approach: Zero-shot, usually. Email subject lines are common. The model knows what they look like.

“Write 5 email subject lines for launching a new wireless earbuds product. Target audience is fitness enthusiasts. Emphasize battery life and sweat resistance.”

When to switch to few-shot: If you have a specific style that’s not obvious, like always starting with a verb, or always using numbers.

Extracting Data from Documents

Scenario: You need to pull specific fields from customer feedback forms.

Best approach: Few-shot. Document extraction formats are particular.

"Extract customer information from these feedback forms:

Form: 'Name: John Smith. Email: [email protected]. Rating: 4/5. Comment: Great product but slow shipping.'

Output: {'name': 'John Smith', 'email': '[email protected]', 'rating': 4, 'comment': 'Great product but slow shipping'}

Form: 'Sarah Johnson ([email protected]) gave us 5 stars! Said she loves the new features.'

Output: {'name': 'Sarah Johnson', 'email': '[email protected]', 'rating': 5, 'comment': 'loves the new features'}

Now extract from: 'Mike Davis, [email protected], 3 out of 5 stars, noted that the interface is confusing.'"

The examples show how to handle different formats in the input. Without them, the model might structure the output differently each time.
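A consistent output format pays off when code has to read the result. The sketch below checks that a response has the same fields as the example outputs. It assumes the model replies with a single-quoted, Python-style dict like the ones in the prompt; the response string and its email address are placeholders, and `parse_extraction` is a hypothetical helper.

```python
import ast

EXPECTED_KEYS = {"name", "email", "rating", "comment"}


def parse_extraction(response: str) -> dict:
    """Parse a dict-style response and check it has exactly the expected fields.

    Raises ValueError (or SyntaxError from ast.literal_eval) on malformed input.
    """
    record = ast.literal_eval(response.strip())
    if not isinstance(record, dict):
        raise ValueError("response is not a dict")
    if set(record) != EXPECTED_KEYS:
        raise ValueError(f"unexpected fields: {sorted(set(record) ^ EXPECTED_KEYS)}")
    if not isinstance(record["rating"], int):
        raise ValueError("rating should be an integer")
    return record


# Hypothetical model response with a placeholder email address.
response = (
    "{'name': 'Mike Davis', 'email': 'mike@example.com', "
    "'rating': 3, 'comment': 'interface is confusing'}"
)
record = parse_extraction(response)
print(record["name"], record["rating"])
```

If you control the prompt, asking for strict JSON and parsing with `json.loads` is usually the sturdier choice. The dict style is used here only to match the example outputs.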

Content Classification

Scenario: Categorizing support tickets by urgency.

Best approach: Could go either way. Start zero-shot, switch to few-shot if results are inconsistent.

Zero-shot attempt:

“Classify this support ticket as Low, Medium, or High urgency: ‘I can’t log into my account. I have a meeting in 10 minutes where I need to present.’”

If the model’s urgency judgments don’t match yours, add examples that show your criteria.

Translating Technical Content

Scenario: Translating documentation from English to Spanish.

Best approach: Zero-shot. Translation is a core capability.

“Translate this API documentation section to Spanish, maintaining technical accuracy and the same formatting.”

When to switch: If you have specific terminology preferences or a glossary that must be followed.

Analyzing Sentiment in Industry-Specific Context

Scenario: Analyzing sentiment in financial news headlines.

Best approach: Few-shot. Financial sentiment is different from general sentiment. “Stock drops 5%” might be neutral or negative depending on context.

Show examples of how you interpret financial language:

“‘Company beats earnings expectations’ - Positive

‘Merger talks collapse’ - Negative

‘Q3 results match analyst forecasts’ - Neutral

‘FDA approves new treatment’ - Positive

Classify: ‘Interest rate decision delayed until next month’”

The Hybrid Approach

Sometimes the best solution combines zero-shot instructions with few-shot examples.

You explain what you want (zero-shot style), then show examples of it (few-shot style). This gives the model both the reasoning and the pattern.

“I need you to rewrite customer reviews to remove identifying information while keeping the sentiment and specific feedback intact. Names should become generic placeholders. Company-specific details should be generalized.

Example:

Original: ‘John from Acme Corp said your widget saved us 3 hours daily!’

Rewritten: ‘[Customer] from [Company] said your product saved them 3 hours daily!’

Original: ‘The Smith family from Portland loves the new features but found setup confusing.’

Rewritten: ‘[Customers] from [Location] love the new features but found setup confusing.’

Now rewrite: ‘Maria at TechStart Inc. emailed saying our software integration cut their processing time in half.’”

The explanation provides context. The examples demonstrate execution. Together, they’re more effective than either alone.

Testing Your Choice

When you’re unsure which approach to use, test both.

Run the same task zero-shot and few-shot. Compare results. See which produces output closer to what you need.

This takes a few extra minutes but prevents you from committing to an approach that doesn’t work for your specific task. What works for one domain might not work for another.

Pay attention to:

- Format consistency

- Accuracy on edge cases

- Whether the model seems to understand your requirements

- Token efficiency (few-shot uses more tokens)
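A side-by-side test can be as small as the sketch below. It scores each prompt builder on a handful of labeled cases and tracks prompt length, using word count as a crude stand-in for tokens. `call_model` is whatever function sends a prompt to your model and returns its text; the `fake_model` here is a placeholder that always answers "Positive" so the sketch runs on its own.

```python
from typing import Callable


def evaluate(
    build_prompt: Callable[[str], str],
    call_model: Callable[[str], str],
    cases: list[tuple[str, str]],
) -> dict:
    """Score one prompting approach on (input_text, expected_label) cases."""
    if not cases:
        raise ValueError("need at least one test case")
    correct = 0
    words = 0
    for text, expected in cases:
        prompt = build_prompt(text)
        words += len(prompt.split())  # crude stand-in for a token count
        if call_model(prompt).strip().lower() == expected.lower():
            correct += 1
    return {
        "accuracy": correct / len(cases),
        "avg_prompt_words": words / len(cases),
    }


def zero_shot(text: str) -> str:
    return f"Classify this review as positive, negative, or neutral: '{text}'"


def few_shot(text: str) -> str:
    demos = "Review: 'Great!' - Positive\nReview: 'Awful.' - Negative\n"
    return demos + zero_shot(text)


def fake_model(prompt: str) -> str:
    """Placeholder only; replace with a real model call."""
    return "Positive"


cases = [("Absolutely love it", "Positive"), ("Broke after two days", "Negative")]
print("zero-shot:", evaluate(zero_shot, fake_model, cases))
print("few-shot: ", evaluate(few_shot, fake_model, cases))
```

Exact-match scoring only works for classification-style tasks. For free-form output you would compare results by hand or with a task-specific check.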

Over time, you’ll develop intuition for which approach fits which tasks. The decision becomes faster.

Key Takeaways

Zero-shot works when:

- Tasks are common and well-defined

- You want the model’s natural interpretation

- The task is straightforward

- You’re using reasoning-focused models

Few-shot works when:

- Format or style matters and isn’t obvious

- The task is unusual or domain-specific

- You need consistency across outputs

- The model’s default interpretation doesn’t match what you need

Best practices for few-shot:

- Use 2-5 diverse examples

- Example quality beats quantity

- Order matters; put clearest examples first

- Keep format consistent across examples

When both fail, look at chain-of-thought prompting for complex reasoning or advanced prompt patterns for sophisticated tasks.

The goal isn’t to always use one approach. It’s to know when each approach makes sense for your specific task.