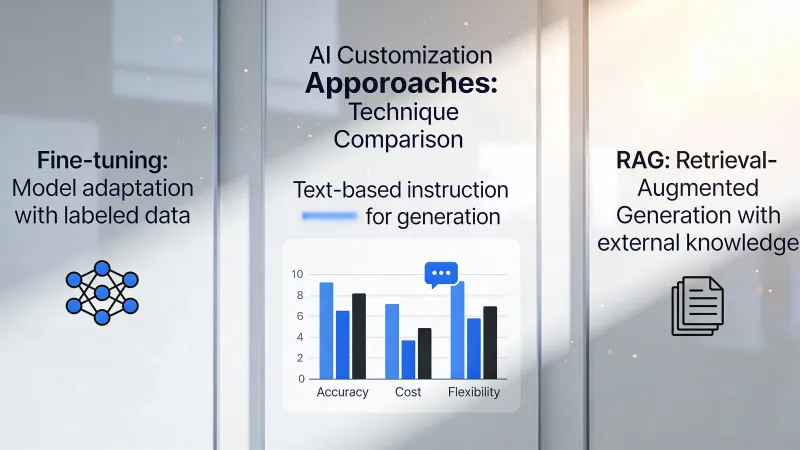

You want AI to work with your specific data, follow your specific style, or handle your specific use case. There are three main ways to make that happen: prompt engineering, RAG, and fine-tuning.

Each solves different problems. Choosing wrong means wasting time and money on an approach that doesn’t fit. Here’s how to pick.

The Quick Version

According to IBM’s technical comparison, prompt engineering, fine-tuning, and RAG are three optimization methods for getting more value from LLMs. All three change how the model behaves, but which one to use depends on your use case and resources.

Prompt engineering: Craft instructions that guide the model toward better output. Fast, cheap, no technical expertise required. Limited by what the model already knows.

RAG (Retrieval-Augmented Generation): Connect the model to your documents so it can look things up. Good for factual accuracy and current information. Requires setting up a knowledge base.

Fine-tuning: Train the model on your data to change how it thinks and responds. Expensive, technically complex, but produces deep customization.

Let’s dig into each.

Prompt Engineering: Start Here

Prompt engineering is crafting instructions that guide the model toward the output you want. You’re not changing the model. You’re changing what you ask it.

TechTarget’s comparison makes the same point: prompt engineering leaves the model’s parameters untouched. It just gives the model better instructions.

What it’s good for:

- Open-ended creative tasks (content generation, brainstorming)

- Testing whether AI can solve your problem at all

- Tasks where the model’s existing knowledge is sufficient

- Getting started quickly without infrastructure

What it can’t do:

- Give the model knowledge it doesn’t have

- Consistently enforce specific behaviors across every response

- Dramatically change the model’s writing style or reasoning approach

Cost: Essentially free beyond your normal AI tool subscription. Time investment for iteration, but no infrastructure or training costs.

Effort to implement: Hours to days. Anyone can do this.

We cover this in detail in our article on better prompts in 5 minutes.

When Prompt Engineering Is Enough

For many business use cases, good prompts are all you need.

Need the model to write in a specific tone? Describe that tone clearly and provide examples. Need structured output? Specify the exact format you want. Need it to follow a process? Write out the steps.
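To make that concrete, here is a minimal sketch of a prompt template that states the tone, gives an example, lists the steps, and pins down the output format. The function name `build_prompt` and the sample email task are illustrative assumptions, not part of any tool or library.

```python
def build_prompt(task, tone, examples, output_format, steps):
    """Assemble one prompt from a task, a tone, examples, steps, and a format."""
    lines = [f"Task: {task}", f"Tone: {tone}", "", "Examples of the tone:"]
    lines += [f"- {example}" for example in examples]
    lines += ["", "Follow these steps:"]
    lines += [f"{number}. {step}" for number, step in enumerate(steps, start=1)]
    lines += ["", f"Output format: {output_format}"]
    return "\n".join(lines)


# Hypothetical inputs, for illustration only.
prompt = build_prompt(
    task="Write a product update email.",
    tone="Friendly and direct, no jargon.",
    examples=["We fixed the login bug. Thanks for your patience."],
    output_format="A subject line, then three short paragraphs.",
    steps=["Summarize the change.", "Explain who is affected.", "State the next step."],
)
print(prompt)
```

Keeping the pieces as separate arguments makes it easier to change one of them, such as the tone, without rewriting the whole prompt.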

According to K2view’s analysis, prompt engineering is the most flexible approach and shines in open-ended situations with diverse outputs, such as content generation from scratch.

The limitation comes when you need knowledge the model doesn’t have, or when prompt instructions get so complex they’re hard to maintain.

RAG: When You Need Your Data

Retrieval-Augmented Generation connects the model to an external knowledge base. When you ask a question, the system retrieves relevant documents and includes them in the prompt. The model generates its answer based on your actual data.
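The retrieve-then-prompt loop can be sketched in a few lines. This toy version scores documents by word overlap (cosine similarity over word counts) instead of the embeddings and vector database a real system would use, so treat it as an illustration of the flow under that assumption. The function names and sample documents are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase a string and split it into word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[word] * b[word] for word in a if word in b)
    norm_a = math.sqrt(sum(count * count for count in a.values()))
    norm_b = math.sqrt(sum(count * count for count in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(question, documents, top_k=2):
    """Return up to top_k documents that share words with the question."""
    query_vector = Counter(tokenize(question))
    scored = [
        (cosine_similarity(query_vector, Counter(tokenize(doc))), doc)
        for doc in documents
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:top_k] if score > 0]


def build_rag_prompt(question, documents, top_k=2):
    """Put the retrieved documents into the prompt as numbered sources."""
    context = retrieve(question, documents, top_k)
    sources = "\n".join(f"[{i}] {doc}" for i, doc in enumerate(context, start=1))
    return (
        "Answer using only the sources below and cite them by number.\n\n"
        f"Sources:\n{sources}\n\nQuestion: {question}"
    )


# Hypothetical knowledge base, for illustration only.
documents = [
    "A refund is available within 30 days of purchase.",
    "Support hours are 9am to 5pm on weekdays.",
    "Shipping to Canada takes five to seven business days.",
]
rag_prompt = build_rag_prompt("What is the refund window after purchase?", documents, top_k=1)
print(rag_prompt)
```

The prompt that reaches the model now contains the refund policy as a numbered source, which is what lets the answer be traced back to a document.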

As we explain in our RAG explainer, RAG addresses three core problems: knowledge cutoffs, lack of access to private data, and hallucination risk.

What it’s good for:

- Customer support using your documentation

- Internal knowledge bases and wikis

- Any task requiring up-to-date or proprietary information

- Situations where you need to cite sources

What it can’t do:

- Change how the model reasons or writes

- Teach the model complex new skills

- Work without a knowledge base to retrieve from

Cost: Moderate. Requires setting up and maintaining a vector database, embedding your documents, and running retrieval. Cloud services make this easier but charge for storage and queries.

Effort to implement: Days to weeks depending on complexity. Requires some technical setup but not data science expertise.

When to Choose RAG

Monte Carlo’s comparison recommends RAG when factual accuracy and up-to-date knowledge are crucial. It’s ideal when facts change frequently, answers need to cite sources, and you need to integrate content across systems.

The transparency matters too. RAG reduces hallucinations and keeps knowledge outside the model, making responses traceable to specific documents.

If your main problem is “the AI doesn’t know about our specific stuff,” RAG is almost certainly the answer.

Fine-Tuning: When You Need Deep Customization

Fine-tuning modifies the model itself. You train it on specialized data, adjusting its internal weights to change how it processes information and generates output.

According to Heavybit’s enterprise guide, fine-tuning can train a model to adhere to a specific output format, call functions reliably, produce JSON output, or keep a consistent voice across all interactions.

What it’s good for:

- Consistent behavior that can’t be achieved with prompts

- Specific output formats that must be exact every time

- Domain expertise the model needs to internalize

- Reducing latency by training a smaller model to do what a larger model does

What it can’t do:

- Give the model access to current information (it still has a knowledge cutoff)

- Update easily when your data changes

- Work without substantial training data

Cost: High. According to cost analyses:

- Small models (2-3B parameters): $300-$700

- Medium models (7B): $1,000-$3,000 with parameter-efficient methods (such as LoRA), up to $12,000 with full fine-tuning

- Larger models (13B+): $2,000-$35,000+ depending on approach

And that’s just training. Data preparation often adds 20-40% to total costs. Plus ongoing costs to retrain as your needs change.

Effort to implement: Weeks to months. Requires data science expertise, quality training data, and infrastructure.
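Much of that effort goes into the training data itself. As a rough sketch, the snippet below turns prompt and ideal-response pairs into chat-style JSONL records and holds some back for validation. The `messages` layout is an assumption modeled on the chat format some hosted fine-tuning services accept, so check the exact schema your provider or training library expects. The helper names and sample pairs are hypothetical.

```python
import json
import random


def to_record(prompt, ideal_response, system_message):
    """Wrap one example in a chat-style record (assumed schema)."""
    return {
        "messages": [
            {"role": "system", "content": system_message},
            {"role": "user", "content": prompt},
            {"role": "assistant", "content": ideal_response},
        ]
    }


def split_examples(records, validation_fraction=0.2, seed=0):
    """Shuffle a copy of the records and hold out a validation slice."""
    shuffled = records[:]
    random.Random(seed).shuffle(shuffled)
    cutoff = max(1, int(len(shuffled) * validation_fraction))
    return shuffled[cutoff:], shuffled[:cutoff]


def to_jsonl(records):
    """Serialize records as one JSON object per line."""
    return "\n".join(json.dumps(record) for record in records)


# Hypothetical examples; a real project needs far more, and of high quality.
pairs = [
    ("Summarize ticket 1", "Customer cannot log in."),
    ("Summarize ticket 2", "Invoice total is wrong."),
    ("Summarize ticket 3", "App crashes on upload."),
    ("Summarize ticket 4", "Password reset email missing."),
    ("Summarize ticket 5", "Request for a refund."),
]
records = [to_record(p, r, "Summarize support tickets in one sentence.") for p, r in pairs]
train, validation = split_examples(records)
print(len(train), "training examples,", len(validation), "validation examples")
print(to_jsonl(train).splitlines()[0])
```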

When to Choose Fine-Tuning

According to Alpha Corp’s analysis, you should invest in fine-tuning when prompts plateau and you need consistent domain behavior, strict formats, or lower latency at scale.

Microsoft’s fine-tuning guidance identifies three areas where training beats longer prompts:

- Consistent schema generation and tool use

- Reliable tone and style

- Lower latency through shorter inputs or smaller models

Kadoa’s 2025 assessment adds that for non-English languages, especially in specialized domains, fine-tuning can dramatically improve performance over off-the-shelf models.

The Risks of Fine-Tuning

Fine-tuning has genuine risks that prompt engineering and RAG don’t:

Overfitting: The model can fit its training data too closely and then fail on new inputs it hasn’t seen before. The risk is highest with small training datasets.

Catastrophic forgetting: The model might overwrite general knowledge learned in original training. Your specialized model could become worse at general tasks.

Safety degradation: Research shows that fine-tuning can compromise built-in safety guardrails. Poorly done, it can enable harmful outputs.

Maintenance burden: When your needs change, you need to fine-tune again. Unlike RAG, where you just update documents, fine-tuning requires retraining.
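Overfitting, the first risk above, is usually caught by watching the loss on held-out validation data: training loss keeps falling while validation loss turns around and climbs. Here is a minimal sketch of that check using simple early stopping. The loss values are made up for illustration, and `best_epoch` is a hypothetical helper, not a function from any training library.

```python
def best_epoch(validation_losses, patience=2):
    """Return the index of the epoch with the lowest validation loss,
    stopping the search once the loss has not improved for `patience` epochs."""
    best_index = 0
    for index, loss in enumerate(validation_losses):
        if loss < validation_losses[best_index]:
            best_index = index
        elif index - best_index >= patience:
            break
    return best_index


# Made-up numbers: training loss keeps dropping, validation loss rises after epoch 2.
training_losses = [2.1, 1.4, 0.9, 0.5, 0.2]
validation_losses = [2.2, 1.6, 1.3, 1.5, 1.9]

keep = best_epoch(validation_losses)
print("Keep the checkpoint from epoch", keep)
```

A widening gap between the two curves is the signal to stop training or to gather more data.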

The Decision Framework

Here’s how to think through which approach to use:

Start with Prompt Engineering

Always start here. Even if you end up needing RAG or fine-tuning, good prompts improve everything.

Test whether the model can do what you need with well-crafted prompts. If yes, you’re done. If no, identify what’s missing.

Add RAG If…

You determine the problem is knowledge, not capability:

- The model gives outdated information

- It doesn’t know about your specific products, policies, or data

- You need responses to cite specific sources

- Your underlying information changes frequently

As one practical guide notes, for internal corporate data without special formatting, RAG is usually the right choice.

Consider Fine-Tuning If…

Even with good prompts and RAG, you’re hitting limitations:

- Output format or style is inconsistent no matter how you prompt

- You need a specific behavior across all interactions

- Latency matters and you need a smaller, faster model

- You’re processing high volumes where prompt tokens cost too much

- The domain requires specialized reasoning the model doesn’t have

And critically: you have the budget, the expertise, and quality training data.
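The framework above can be written down as a short function. This is a sketch of the decision order described in this section, with hypothetical field names. It is a thinking aid, not a substitute for testing on your own use case.

```python
from dataclasses import dataclass


@dataclass
class Situation:
    """Answers to the questions in the decision framework (hypothetical fields)."""
    prompts_meet_the_need: bool = False
    missing_or_stale_knowledge: bool = False
    inconsistent_format_or_style: bool = False
    latency_or_token_cost_problem: bool = False
    has_budget_expertise_and_data: bool = False


def recommend(situation):
    """Return the approaches to use, in the order you would adopt them."""
    approaches = ["prompt engineering"]  # always start here
    if situation.prompts_meet_the_need:
        return approaches
    if situation.missing_or_stale_knowledge:
        approaches.append("RAG")
    behavior_gap = (
        situation.inconsistent_format_or_style
        or situation.latency_or_token_cost_problem
    )
    # Fine-tuning only makes sense with the resources to do it right.
    if behavior_gap and situation.has_budget_expertise_and_data:
        approaches.append("fine-tuning")
    return approaches


print(recommend(Situation(missing_or_stale_knowledge=True)))
print(recommend(Situation(inconsistent_format_or_style=True)))
```

Note the second call: without budget, expertise, and data, the function stays with prompt engineering even though the format problem is real.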

Combining Approaches

These aren’t mutually exclusive. Production systems often use all three.

According to orq.ai’s technical guide, RAG and fine-tuning can be used together. Fine-tune the model for how to think and respond. Use RAG to supply what to say for the current question. The model stays consistent and the facts stay fresh and auditable.

A common pattern:

- Prompt engineering establishes base instructions and guardrails

- RAG provides relevant documents for each query

- Fine-tuning ensures consistent style and specialized capabilities

Think of it as: prompts for instructions, RAG for knowledge, fine-tuning for behavior.
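In a single request, the three layers might sit together like this. The sketch only builds a chat-style payload and does not call any API. The model name `my-fine-tuned-model`, the instructions, and the payload shape are all assumptions for illustration.

```python
BASE_INSTRUCTIONS = (
    "You are a support assistant. Answer only from the provided sources. "
    "If the sources do not cover the question, say so."
)


def build_request(question, retrieved_documents, model_name="my-fine-tuned-model"):
    """Combine all three layers into one chat-style request payload."""
    sources = "\n".join(
        f"[{number}] {text}"
        for number, text in enumerate(retrieved_documents, start=1)
    )
    return {
        # Fine-tuning: the behavior lives in the model you select.
        "model": model_name,
        "messages": [
            # Prompt engineering: base instructions and guardrails.
            {"role": "system", "content": BASE_INSTRUCTIONS},
            # RAG: retrieved documents travel with the question.
            {"role": "user", "content": f"Sources:\n{sources}\n\nQuestion: {question}"},
        ],
    }


request = build_request(
    "What is the refund window?",
    ["A refund is available within 30 days of purchase."],
)
print(request["messages"][1]["content"])
```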

The Cost Reality

Let’s be concrete about costs:

Prompt engineering: $0 beyond your existing AI subscription. Maybe $50-500 for a prompt engineering course or consultant if you want outside help.

RAG: Vector database hosting ($50-500/month depending on scale). Embedding costs (about $0.0001 per 1,000 tokens for OpenAI embeddings). Development time to set it up. Total: probably $200-2,000/month for a moderate implementation.

Fine-tuning: According to industry analyses, GPU pricing ranges from $0.50 to $2+ per hour. A full fine-tuning project including data prep, training, testing, and deployment can run $5,000-50,000+ for enterprise use cases.

At the other extreme, a small fine-tuning run can cost under $10 in compute with efficient techniques, but that assumes you have the technical expertise to implement it. Most businesses need outside help or significant internal data science resources.
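A quick back-of-the-envelope calculation shows where the money goes. The rates below are the ones quoted in this section. The token count and GPU hours are hypothetical inputs, so plug in your own.

```python
def embedding_cost(total_tokens, price_per_1k_tokens=0.0001):
    """Cost in dollars to embed a corpus at a per-1,000-token rate."""
    return total_tokens / 1000 * price_per_1k_tokens


def gpu_cost(gpu_hours, price_per_hour):
    """Cost in dollars of renting GPUs for a training run."""
    return gpu_hours * price_per_hour


# Hypothetical workload: a 10-million-token document set and 100 GPU hours.
corpus_tokens = 10_000_000
training_hours = 100

print(f"Embedding the corpus once: ${embedding_cost(corpus_tokens):.2f}")
print(f"GPU time at $0.50/hour:    ${gpu_cost(training_hours, 0.50):.2f}")
print(f"GPU time at $2.00/hour:    ${gpu_cost(training_hours, 2.00):.2f}")
```

Under these assumptions, embedding is a rounding error next to hosting, and raw GPU time is a small slice of a fine-tuning project. Most of the cost sits in data preparation, testing, deployment, and the people doing the work.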

What the Market Is Doing

The trend is toward smarter combinations rather than pure approaches.

According to 2025 industry assessments, organizations with mature fine-tuning capabilities gain competitive advantages through AI systems that understand their unique business context.

But practical analysis shows the real money is in fine-tuned smaller models for specific tasks. The Small Language Model market hit $6.5 billion in 2024 with projections of $20+ billion by 2030.

The pattern: use large general models via API for experimentation and diverse tasks. Fine-tune smaller models for high-volume, specific operations where costs and latency matter.

Practical Recommendations

If you’re just getting started: Invest in prompt engineering. Get good at it. Most use cases won’t need anything more.

If you need company-specific knowledge: Implement RAG. It’s the clearest path to AI that knows your stuff without massive investment.

If you have a specific, high-volume use case with consistent requirements: Consider fine-tuning a smaller model. The ROI math works when you’re running thousands of similar queries daily.

If you’re not sure: Start with prompts. Add RAG when you hit knowledge limitations. Only explore fine-tuning when you have clear evidence that the other approaches aren’t working and you have the resources to do it right.
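For the high-volume case, the ROI math is a break-even calculation: how long until per-query savings pay back the upfront fine-tuning cost. Here is a minimal sketch with made-up numbers. Every figure in the example is a hypothetical input, not a benchmark.

```python
import math


def break_even_days(upfront_cost, queries_per_day, cost_per_1k_before, cost_per_1k_after):
    """Days until savings cover the upfront cost, or None if there are no savings.

    Costs are in dollars per 1,000 queries, before and after fine-tuning.
    """
    saving_per_1k = cost_per_1k_before - cost_per_1k_after
    if saving_per_1k <= 0:
        return None
    daily_saving = saving_per_1k * queries_per_day / 1000
    return math.ceil(upfront_cost / daily_saving)


# Hypothetical: $10,000 project, 5,000 queries a day,
# $20 per 1,000 queries on a large model vs $4 on a fine-tuned small one.
days = break_even_days(10_000, 5_000, 20, 4)
print("Break-even after", days, "days")
```

With these inputs the project pays for itself in about four months. At 500 queries a day the same project would take more than three years, which is why volume matters so much.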

The biggest mistake is jumping to fine-tuning because it sounds more sophisticated. It’s harder, more expensive, and often unnecessary. The simplest approach that works is usually the right one.